Team:ETH Zurich/Tools/Automated Wiki

From 2008.igem.org

Latest revision as of 05:39, 30 October 2008

== Motivation ==
At the beginning of our project planning phase, we thought about how to deal with the wiki.

One major shortcoming showed up when we browsed the wikis of previous iGEM competitions: a lot of them simply didn't work anymore. The problem is that large parts of the old wikis are stored on external servers, e.g. JavaScript menus hosted on students' private homepages. As you can see, the .js file is stored on an external server. As soon as this file is gone, the whole wiki page stops working, because the navigation is gone. And if you browse through the wikis of even earlier years, you can see that this has already happened to a lot of pages.

== This year's situation ==
When we started to think about how to design the wiki, it turned out that the people responsible for this wiki were aware of the situation: embedding external content into a wiki page did not seem to work anymore. While this solved the problem of missing content, it gave rise to a new one. Wiki syntax is very simple and therefore easy to handle. While this is a benefit when editing pages, it comes with a lack of flexibility in design, or a lot of work if you want a nice design anyway. The wiki syntax also makes it harder to separate design and content, as is the case for our wiki page right now.

Since we have a lot of people responsible for the content and only a few working on the design, we agreed on editing pages like this:

<!-- PUT THE PAGE CONTENT AFTER THIS LINE. THANKS :) -->

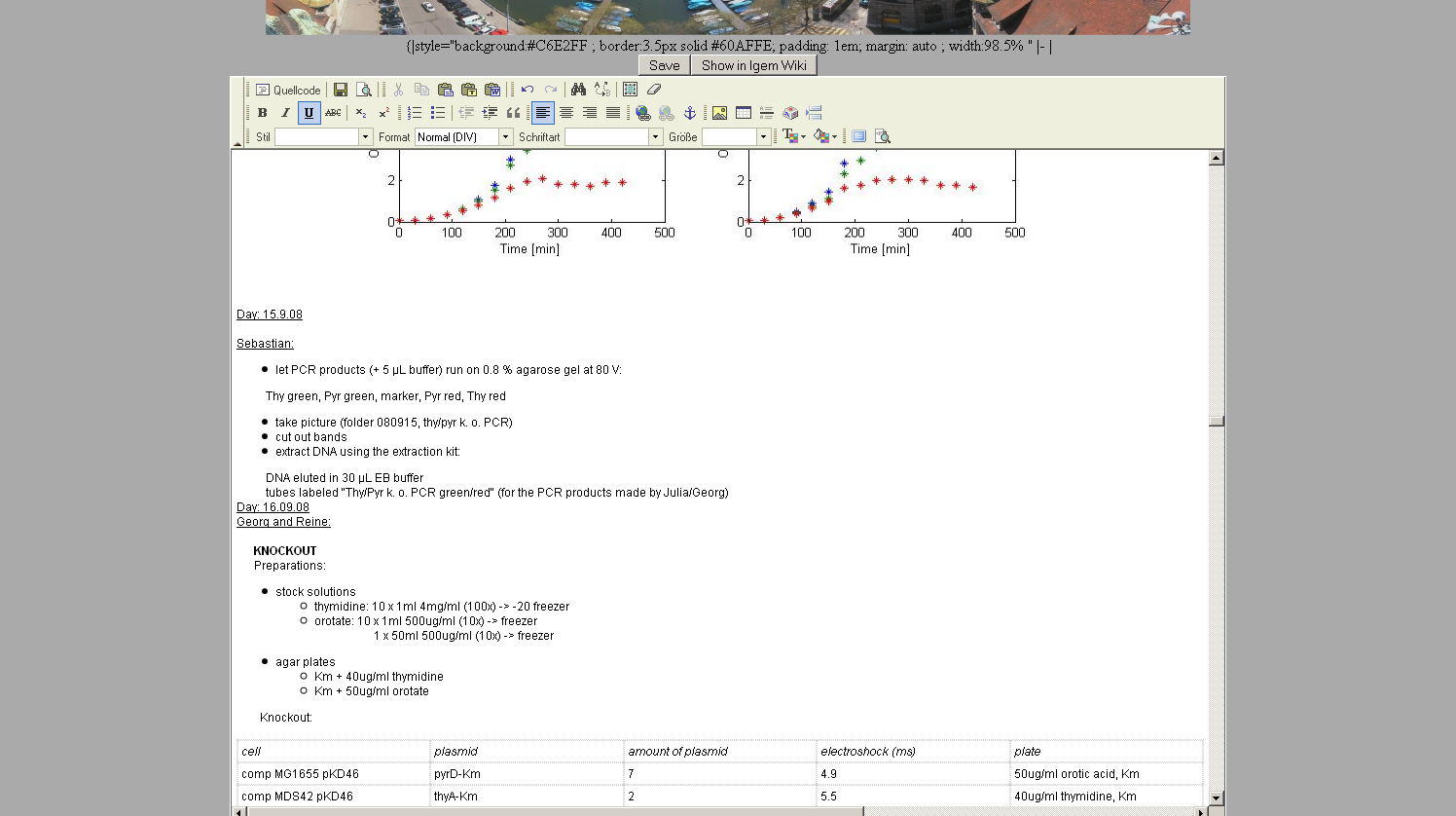

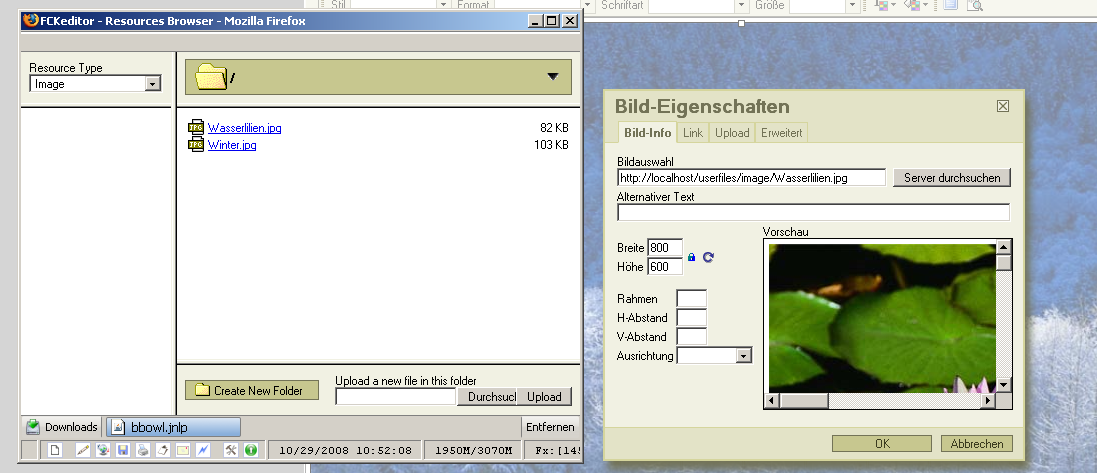
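The marker convention above can also be enforced mechanically. The following is only a minimal Python sketch and not part of the original tool: it assumes that everything up to the marker comment is design markup and everything after it is content, and the function name `replace_content` and the sample layout line are hypothetical.

```python
# The marker comment is taken verbatim from the editing convention above.
MARKER = "<!-- PUT THE PAGE CONTENT AFTER THIS LINE. THANKS :) -->"


def replace_content(page: str, new_content: str) -> str:
    """Keep everything up to and including the marker, swap what follows."""
    head, found, _old_content = page.partition(MARKER)
    if not found:
        # Refuse to guess where the design part ends.
        raise ValueError("marker comment not found in page")
    return head + MARKER + "\n" + new_content


# Hypothetical page: one line of design markup, the marker, then content.
page = "<div id='layout'>design markup</div>\n" + MARKER + "\nOld text"
updated = replace_content(page, "New text")
print(updated)
```

Content editors would then only ever touch the text after the marker, while the design part above it is passed through unchanged.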

== How to overcome this situation ==
While the reasons why external content should not be allowed on the wiki were obvious, we still didn't want to settle for "just" using regular wiki editing. Therefore, we had the idea of using software to overcome the shortcomings listed above. We wanted to introduce a "middle man" that performs the editing tasks for the user and separates content from syntax while keeping wiki-only syntax on the MIT wiki site.

== The automated wiki ==
=== Software / Programming Language ===
The automated wiki is written in C# as a web application that needs an IIS server to run on.

=== User Interface ===
The user interface uses the [http://www.fckeditor.net/demo FCKEditor Tool] as an input interface. You can test it [http://www.fckeditor.net/demo here] and see what it can do.

A short list of benefits compared to regular wiki editing:
*"What you see is what you get" editor.
** Drag and drop available
** Image editing in place (drag and drop or via properties dialog)
** Formatting via pointing
*Pasting and converting text from Word or HTML pages!

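=== Parsing Sites ===
At this point we stopped developing the automated wiki. This part is mainly responsible for adding the layout to the text.<br>
To achieve this, a layout consisting of a regular HTML page that contains various parsing tags is read out of the database.<br>
For every parsing tag, the program then inserts the correct page content.<br>
That way, the menu or a layout image that is placed on every page can be edited in one place and then updated on every other page.<br>
Our plan was to also parse the text for keywords (e.g. papers) and automatically create the correct links on the wiki.

=== Writing into MIT Wiki ===
Finally, after all the pages are generated, those that have been changed are updated on the MIT wiki. To do this, the application simply mimics a regular user edit by logging in with a regular user account and editing page by page. To see an example of a page generated with the automated wiki, click here.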
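The two steps described above, filling a layout and then updating only the changed pages, can be illustrated with a short sketch. This is not the team's C# code but a minimal Python illustration under stated assumptions: the `%%NAME%%` tag syntax, the function names, and the use of SHA-256 digests to detect changed pages are all hypothetical, and the actual login and upload to the wiki are left out.

```python
import hashlib
import re

# Hypothetical tag syntax: %%NAME%% placed inside an ordinary HTML layout.
TAG_PATTERN = re.compile(r"%%([A-Z_]+)%%")


def render_page(layout: str, parts: dict) -> str:
    """Replace every parsing tag in the layout with the matching page part."""
    def substitute(match):
        name = match.group(1)
        # Unknown tags are left untouched so that mistakes stay visible.
        return parts.get(name, match.group(0))
    return TAG_PATTERN.sub(substitute, layout)


def digest(text: str) -> str:
    """Fingerprint of a rendered page, used to detect changes (an assumption)."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def changed_pages(rendered: dict, published: dict) -> list:
    """Return the titles whose rendered text differs from what was last published."""
    return sorted(
        title for title, text in rendered.items()
        if published.get(title) != digest(text)
    )


# The menu lives in one place and is inserted into every page.
layout = "<div id='menu'>%%MENU%%</div>\n<div id='content'>%%CONTENT%%</div>"
menu = "<a href='/Team'>Team</a>"
rendered = {
    "Home": render_page(layout, {"MENU": menu, "CONTENT": "<p>Welcome</p>"}),
    "Team": render_page(layout, {"MENU": menu, "CONTENT": "<p>Members</p>"}),
}

# Pretend that only "Home" was uploaded earlier in exactly this form.
published = {"Home": digest(rendered["Home"])}
to_upload = changed_pages(rendered, published)
print(to_upload)  # prints ['Team']
```

Because the menu is stored once and substituted into every page, editing it changes the digest of every rendered page, so all of them would be queued for upload on the next run.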

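== Why we didn't use it in the end ==
There were two reasons why we didn't use the automated wiki in the end:
*Within the team, not everyone felt comfortable with the idea that we would need an extra tool for editing the wiki, because this also brings an additional source of errors and complications.
*For some reason, the MIT wiki team decided to loosen up the allowed syntax again, so now we are back at the situation that everything, from HTML to JavaScript and also Flash, is allowed. With these changes, the main reason why we introduced the automated wiki in the first place was gone...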

== Download ==
[[Media:ETH Auto wiki.zip]]

Keep in mind that the development of this tool stopped in the middle of the project. However, you might be able to use some ideas or parts of it.
