Team:Newcastle University/Original Aims

From 2008.igem.org

Revision as of 23:23, 26 August 2008

Newcastle University

GOLD MEDAL WINNER 2008


Parallel Evolution

The standard practice in biology today, synthetic or not, is to treat bioinformatics as just another tool for achieving an end. A problem is identified, and the biologist works out a possible avenue of exploration. Sometimes this involves bioinformatics tools, such as BLAST searches or phylogenetic trees. Once the method of exploration is established, the biologist rarely goes back to bioinformatics approaches to analyze her results.

The concept of parallel evolution treats bioinformatics not only as a tool in biology, but also as a viable, if limited, method for exploring a problem. Wet-lab biology is expensive in terms of time, money, and manpower. A single bioinformatician can test the same situation with no equipment other than a computer, and can run many iterations of the same experiment within seconds, rather than the weeks that the lab work may take.

However, bioinformatics is only as good as its simulation. The field expands daily with new information, all of which must be incorporated into the simulation for it to give useful results. Much of bioinformatics is backed by hard data from wet labs. Running an experiment 1000 times is only useful if you know what all the variables are, and what values they should take.

The most sensible way to combine these different yet complementary methods is to play one off against the other, taking advantage of the strengths of each while minimizing their limitations.

Dry-Lab Approach

The two techniques we decided to use in our bioinformatics approach were neural networks and genetic algorithms. Computer science has consistently looked to Mother Nature for inspiration, and the results are best exemplified by these two techniques.

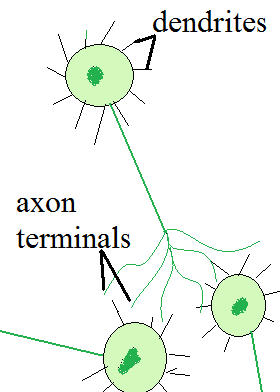

Neural networks are a machine learning method modelled on neurons in the brain. In a neuron, signals are received at the dendrites. If the combined triggering impulse exceeds a certain threshold, the neuron fires. The impulse travels down the cell membrane of the axon and is transmitted via the axon terminals to the dendrites of the next neuron. When a neural pathway is traveled often, its connections are reinforced: the axon of the preceding neuron and the dendrites of the subsequent neuron grow to be more highly connected. This is how we learn, and why we can still change behaviour patterns once they are established. Forcing the impulse to take another route results in an altered behaviour or thought.

This same approach is used to teach a computer how to 'think' about a problem. The neurons in the system become nodes. The nodes are arranged in several layers. "Impulses" begin at the input layer, travel through any number of hidden layers, and terminate at the output layer. The output layer gives the computer its learned behaviour.
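The layered arrangement described above can be sketched in a few lines of Python. This is only an illustration, not the team's implementation: the function names, the layer sizes, the weight values, and the choice of a sigmoid to squash each node's output are all assumptions made for the example.

```python
import math

def sigmoid(x):
    """Squash a weighted sum into the range (0, 1). Assumed activation."""
    return 1.0 / (1.0 + math.exp(-x))

def layer_forward(inputs, weights, biases):
    """Compute one layer: each node sums its weighted inputs, then squashes."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]

def network_forward(inputs, layers):
    """Carry the 'impulse' from the input layer through every later layer."""
    signal = inputs
    for weights, biases in layers:
        signal = layer_forward(signal, weights, biases)
    return signal

# Hypothetical network: 2 inputs -> 3 hidden nodes -> 1 output node.
# The weights are arbitrary illustrative values, not trained ones.
hidden = ([[0.5, -0.4], [0.3, 0.8], [-0.6, 0.1]], [0.0, 0.1, -0.1])
output = ([[0.7, -0.2, 0.5]], [0.05])
result = network_forward([1.0, 0.0], [hidden, output])
```

Here `result` is a list with one value between 0 and 1, the response of the single output node. Adding more `(weights, biases)` pairs to the list adds more hidden layers.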

Nodes can be highly interconnected. The connections themselves have a weight, which corresponds to the number of axon-dendrite connections in neurons. Nodes that are connected with a higher weight are more likely to fire.
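To make the role of the weights concrete, here is a minimal sketch of a single node that fires only when its weighted input exceeds a threshold. The function name `node_fires`, the threshold of 1.0, and the weight values are hypothetical, chosen only for illustration.

```python
def node_fires(inputs, weights, threshold):
    """Return True if the weighted sum of the inputs exceeds the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return total > threshold

inputs = [1.0, 1.0]

# The same inputs arrive over weak and strong connections.
weak = node_fires(inputs, [0.2, 0.3], threshold=1.0)    # weighted sum 0.5
strong = node_fires(inputs, [0.6, 0.7], threshold=1.0)  # weighted sum about 1.3
```

With identical inputs, only the strongly weighted node crosses the threshold: `weak` is `False` and `strong` is `True`.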

Neural networks must be trained in order to function properly. Untrained neural networks are like newborn babies: cute, but unable to perform any higher functions. They must be taught.
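The page does not say how the networks were trained, so the following is only a sketch of one classic method, the perceptron learning rule, applied to a single node. The task (learning logical AND), the function names, the number of epochs, and the learning rate are all assumptions made for the example.

```python
def predict(weights, bias, inputs):
    """A single threshold node: output 1 if the weighted sum is positive."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0

def train_perceptron(examples, epochs=20, learning_rate=1.0):
    """Nudge the weights towards the target whenever the node gets it wrong."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

# Training data for logical AND: (inputs, expected output).
AND_EXAMPLES = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]

weights, bias = train_perceptron(AND_EXAMPLES)
predictions = [predict(weights, bias, inputs) for inputs, _ in AND_EXAMPLES]
```

Before training, the node outputs 0 for every input. After training, `predictions` matches the expected outputs for all four cases. Networks with hidden layers need a more elaborate procedure, such as backpropagation, but the principle of adjusting weights to reduce error is the same.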
